
Looking Inside Google Glass

Interest in Google Glass is growing. The product will not be officially available to consumers until early 2014, but devices have already been delivered to developers.

CNET got its hands on one for a teardown, but the teardown didn’t go much further than the few pieces that could be easily removed. Getting to the processor or memory would require destroying the plastic housing, and at a retail price of about $1,500, that is not likely to happen. What is known so far comes from Google’s specs, software developers and debugging tools.

According to Google, Glass has a high-resolution display, a 5-megapixel camera and 720p video. Connectivity is 802.11b/g Wi-Fi and Bluetooth. Total flash memory is 16 GB, of which 12 GB is available to the user. It comes with a micro USB cable and charger.

What has been determined is that the main processor is a Texas Instruments OMAP 4430 with 1 GB of RAM. TI originally targeted OMAP at the smartphone market, where it has lost share to other chip vendors, notably Qualcomm. TI has since refocused OMAP on a broader market, including consumer devices. Amazon, for example, uses the OMAP 4430 in the Kindle Fire.

Android developers have found 16 sensor and location sources available to app developers on Glass. The sensors, which are part of the Google Android Sensor Manager system, are:

- MPL Gyroscope

- MPL Accelerometer

- MPL Magnetic Field

- MPL Orientation

- MPL Rotation Vector

- MPL Linear Acceleration

- MPL Gravity

- LTR-506ALS Light sensor

- Rotation Vector Sensor

- Gravity Sensor

- Linear Acceleration Sensor

- Orientation Sensor

- Corrected Gyroscope Sensor

The location providers are:

- Network

- Passive

- GPS

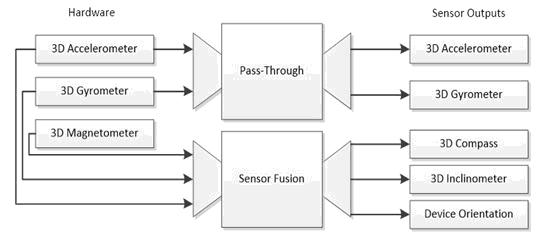

It appears that these are not all physical sensors. The acronym “MPL” stands for “Motion Processing Library”, software that feeds the Google Android Sensor Manager system. A sensor fusion algorithm, running either in a sensor hub controller or on the TI OMAP, converts the raw sensor data into information for the OS and APIs. Figure 1 shows conceptually how this works in a 9-axis sensor fusion solution. The blocks on the right side, labeled “Sensor Outputs”, are essentially “virtual sensors” that app developers work with. Semico therefore believes there are fewer physical sensors than the list suggests. For example, orientation and linear acceleration can be computed from accelerometer data, with help from the gyroscope and magnetometer, rather than measured by dedicated sensors. It appears that Google Glass uses MEMS sensors for the accelerometer, gyroscope and magnetometer in a sensor fusion solution, along with non-MEMS sensors such as the LTR-506ALS light sensor.

Figure 1: Interfacing Sensors to OS and APIs via Sensor Fusion

Source: Geek.com and Microsoft
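The idea that several listed entries are virtual sensors computed from a few physical ones can be illustrated with a short Python sketch. It assumes raw accelerometer readings in m/s² and a gyroscope pitch rate in deg/s. The function names, the filter constants (alpha = 0.8, k = 0.98), the pitch/roll axis convention and the choice of a low-pass filter plus a complementary filter are all illustrative assumptions, not what Glass or the MPL actually runs.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def split_gravity(samples: Sequence[Vec3], alpha: float = 0.8):
    """Split raw accelerometer samples (m/s^2) into gravity and linear acceleration.

    Illustrative sketch: a first-order low-pass filter tracks the slowly changing
    gravity component; whatever remains after subtracting it is treated as
    linear (user) acceleration, i.e. two "virtual sensors" from one physical one.
    """
    gravity = tuple(samples[0])  # seed the filter with the first reading
    gravities: List[tuple] = []
    linear: List[tuple] = []
    for sample in samples:
        gravity = tuple(alpha * g + (1 - alpha) * s for g, s in zip(gravity, sample))
        gravities.append(gravity)
        linear.append(tuple(s - g for s, g in zip(sample, gravity)))
    return gravities, linear


def tilt_from_gravity(gravity: Vec3) -> Tuple[float, float]:
    """Return (pitch, roll) in degrees implied by a gravity vector.

    Uses one common axis convention (assumed); heading would additionally
    need the magnetometer.
    """
    gx, gy, gz = gravity
    pitch = math.degrees(math.atan2(-gx, math.hypot(gy, gz)))
    roll = math.degrees(math.atan2(gy, gz))
    return pitch, roll


def complementary_pitch(gyro_rates: Sequence[float], accel_pitches: Sequence[float],
                        dt: float, k: float = 0.98) -> List[float]:
    """Fuse gyroscope pitch rate (deg/s) with accelerometer pitch (deg).

    The integrated gyro rate is smooth but drifts; the accelerometer angle is
    drift-free but noisy. Weighting them with k and 1 - k combines the two.
    """
    angle = accel_pitches[0]
    fused: List[float] = []
    for rate, acc in zip(gyro_rates, accel_pitches):
        angle = k * (angle + rate * dt) + (1 - k) * acc
        fused.append(angle)
    return fused


if __name__ == "__main__":
    # Device at rest, then a brief 1 m/s^2 push along x.
    readings = [(0.0, 0.0, 9.81)] * 20 + [(1.0, 0.0, 9.81)]
    grav, lin = split_gravity(readings)
    print("linear acceleration:", lin[-1])
    print("pitch, roll:", tilt_from_gravity(grav[-1]))
```

At rest, the linear-acceleration output stays near zero. A sudden push shows up first as linear acceleration and, if sustained, gradually leaks into the gravity estimate. That weakness is one reason real fusion solutions bring in the gyroscope, as the complementary filter does for tilt.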

Semico has examined the development of sensor fusion and the different ways it can be implemented. The report “MEMS and Controllers: Dynamics of Competition” focuses on MEMS and the controllers that form the basis for sensor fusion.

Google is packing quite a bit of technology into a small package, and there is a high price to pay for all of it. Over time, as volumes grow, the price should come down. The wide array of sensors and the sensor fusion algorithm are important enabling technologies for Google Glass, letting developers build innovative apps for augmented reality, motion tracking and more.

For more information on my report “MEMS and Controllers: Dynamics of Competition”, contact Rick Vogelei.
